Product

B2B

Sponsored by

Adobe

Solving for fatigue in AI agents in enterprise software

Overview

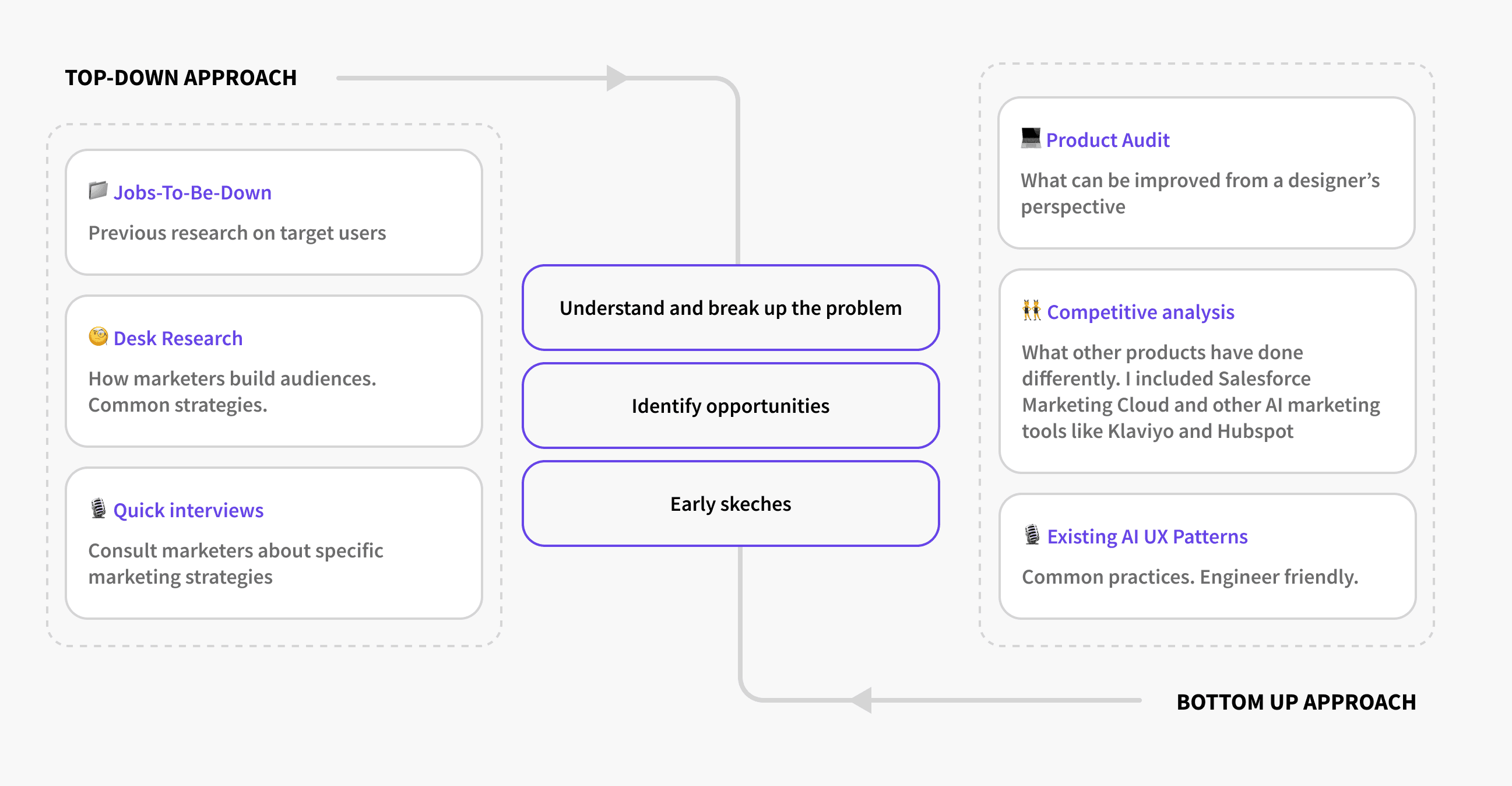

In 2025, I was excited to work with Adobe's enterprise product team through a design studio course. Over four months, I reimagined a more nimble human-AI collaboration experience for Audience Builder.

01 Context

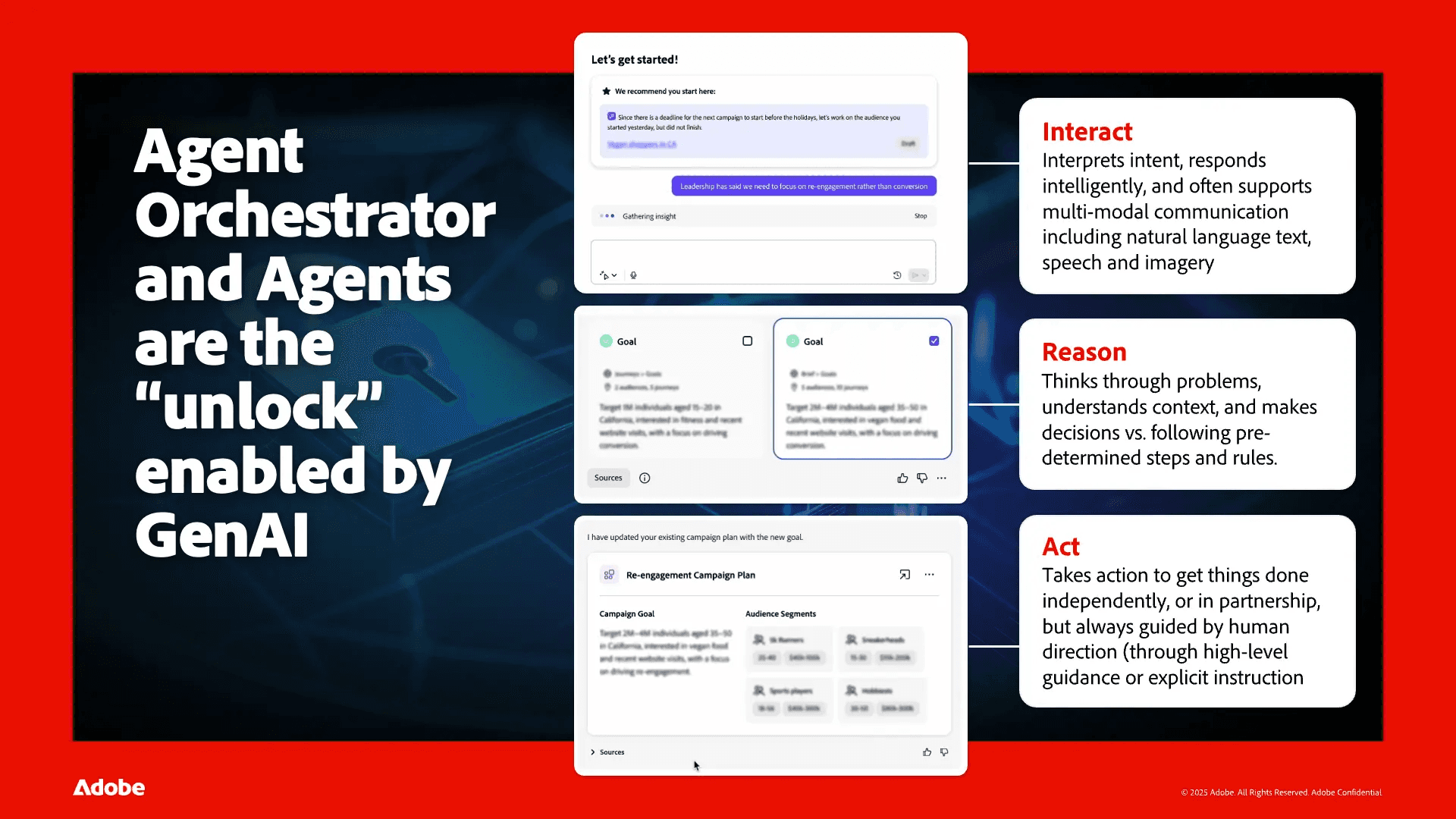

Adobe released AI agents in Adobe Experience Platform (AEP), a B2B platform that helps marketers unify customer data and launch campaigns.

02 Problem

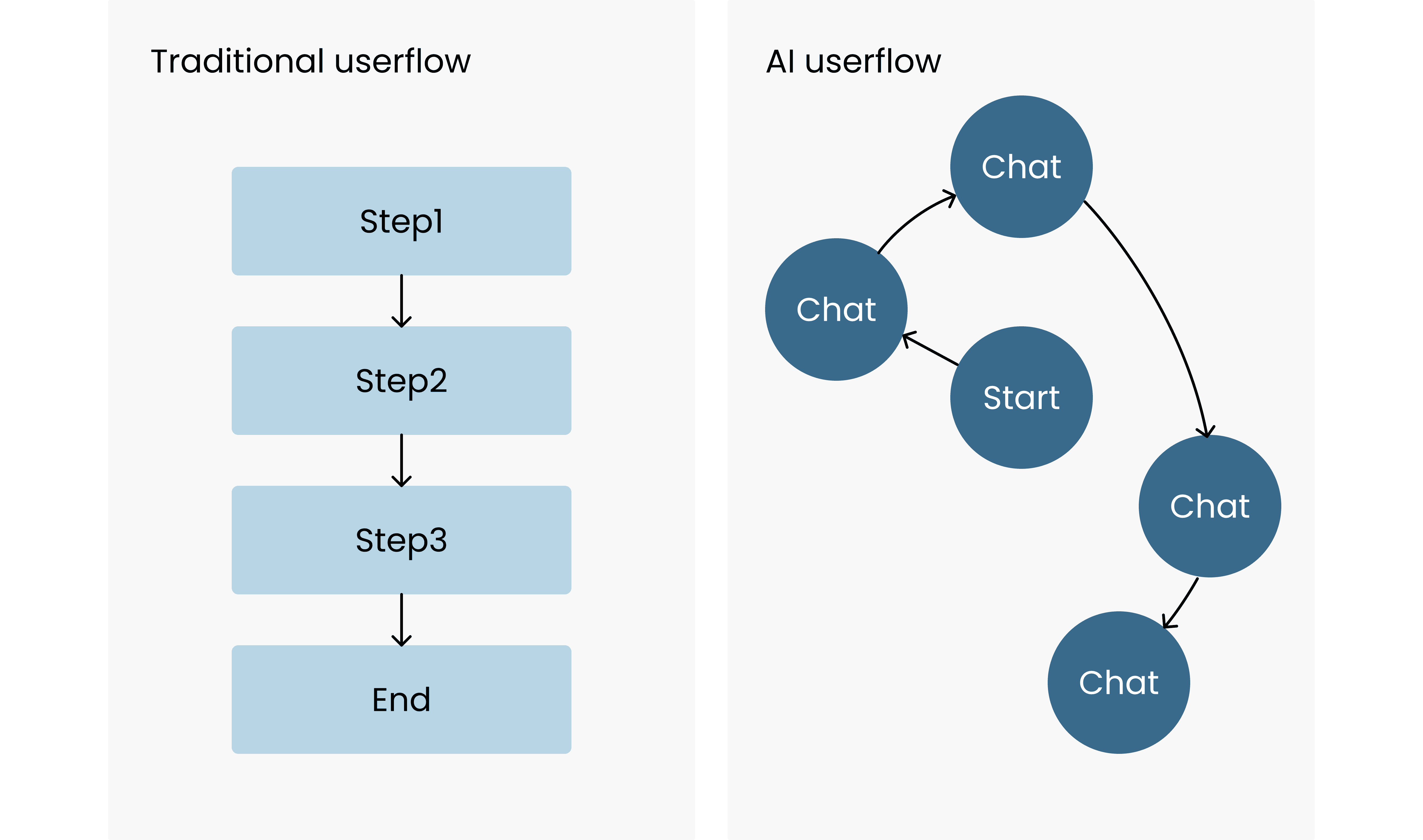

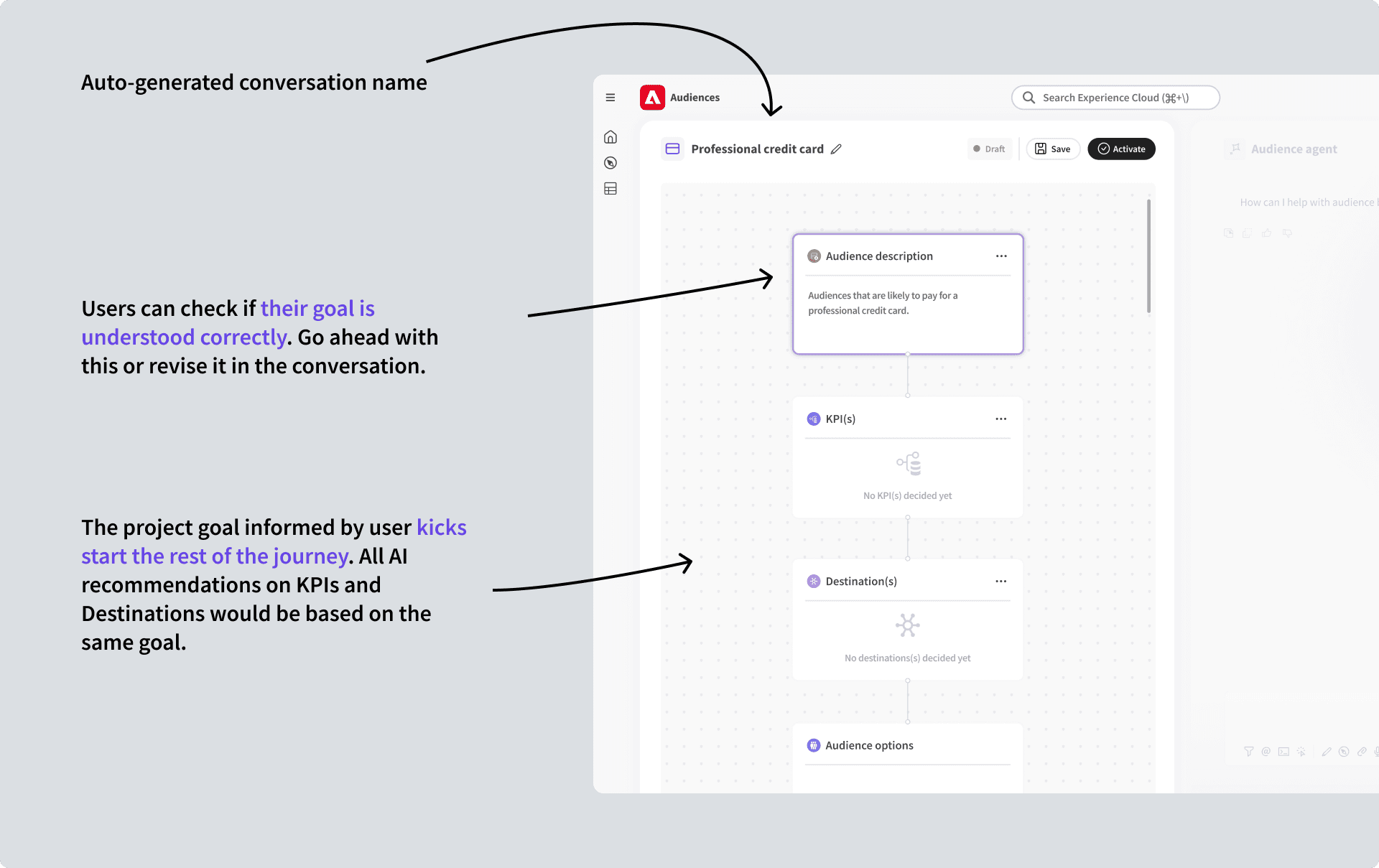

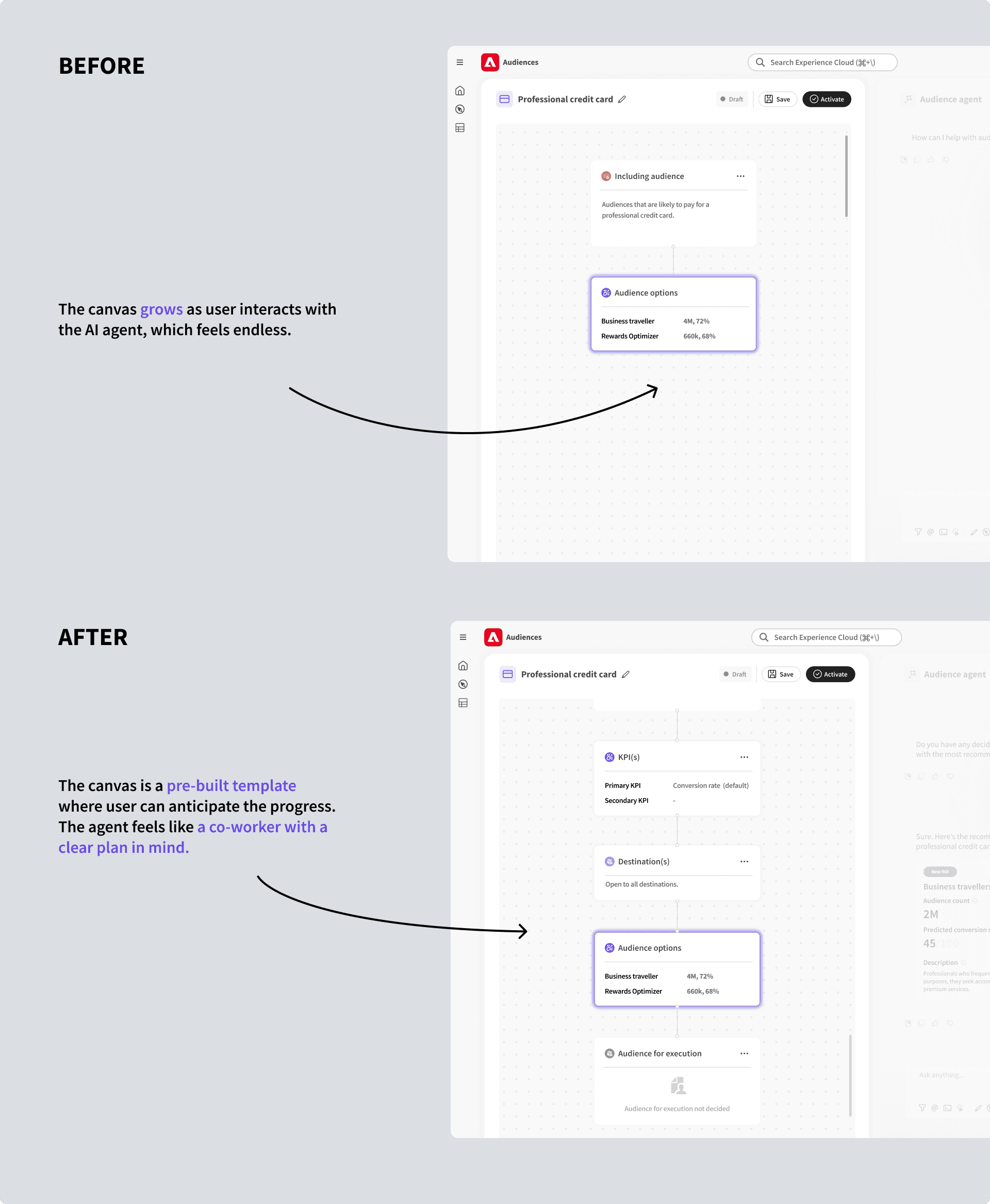

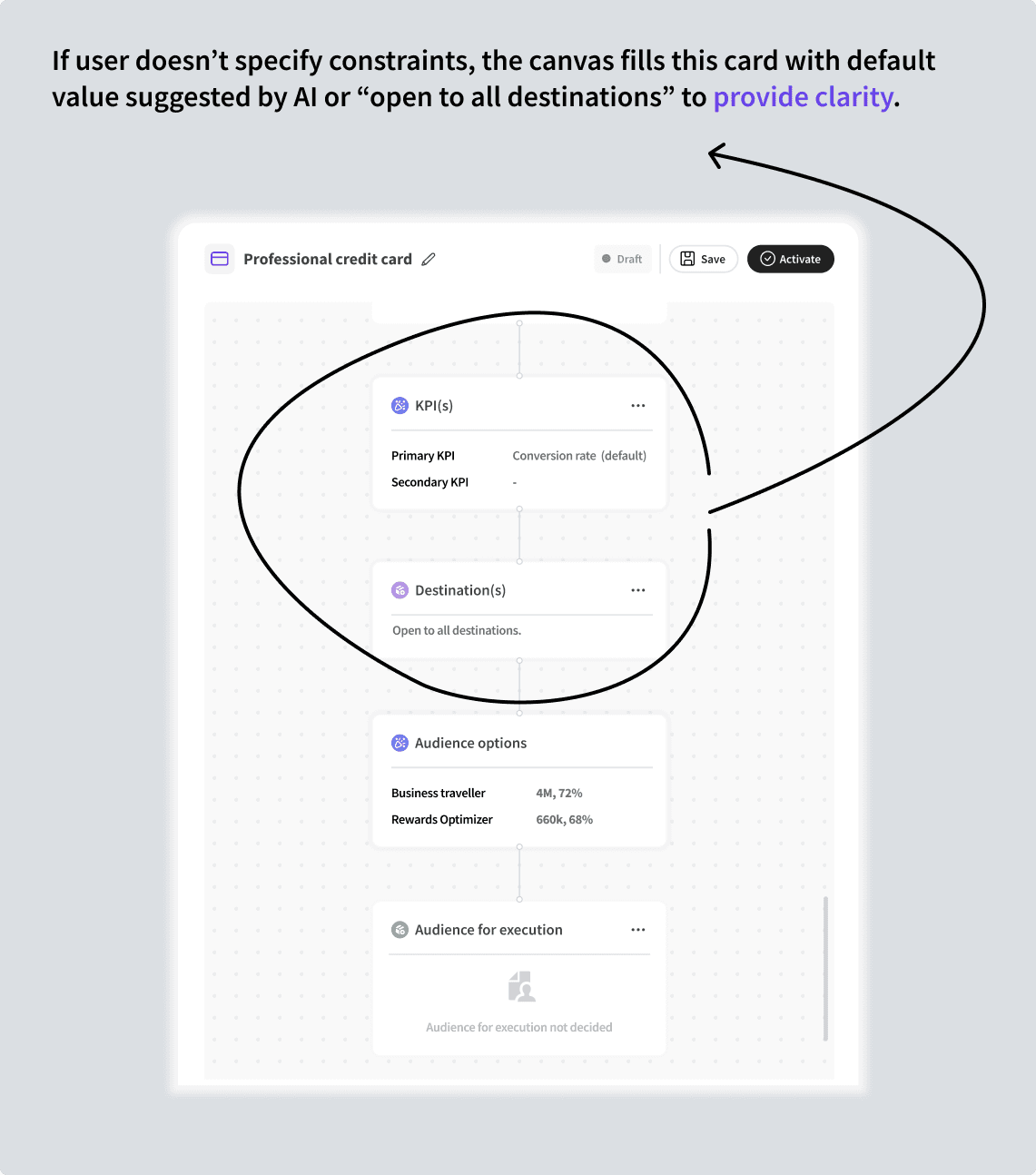

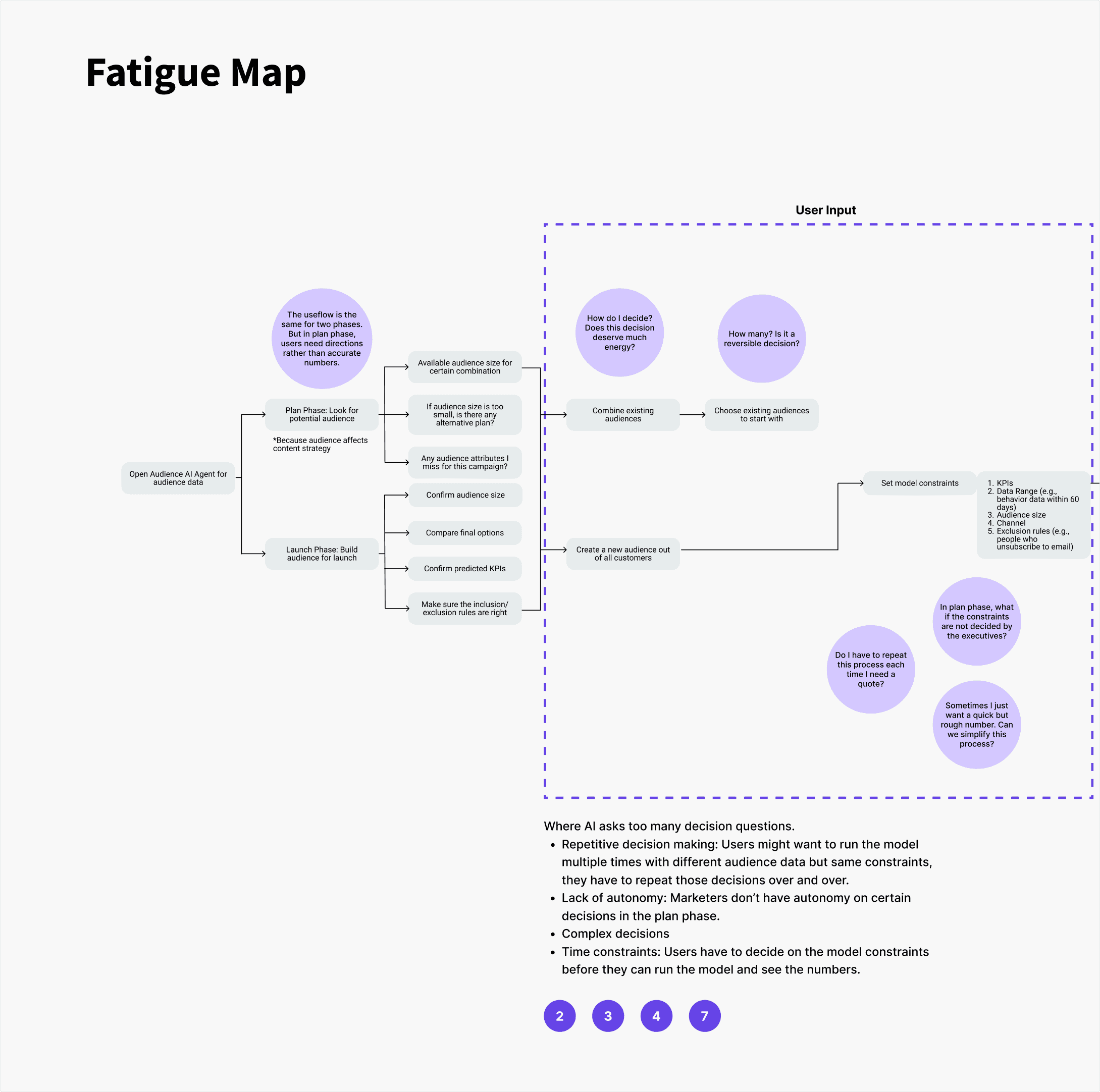

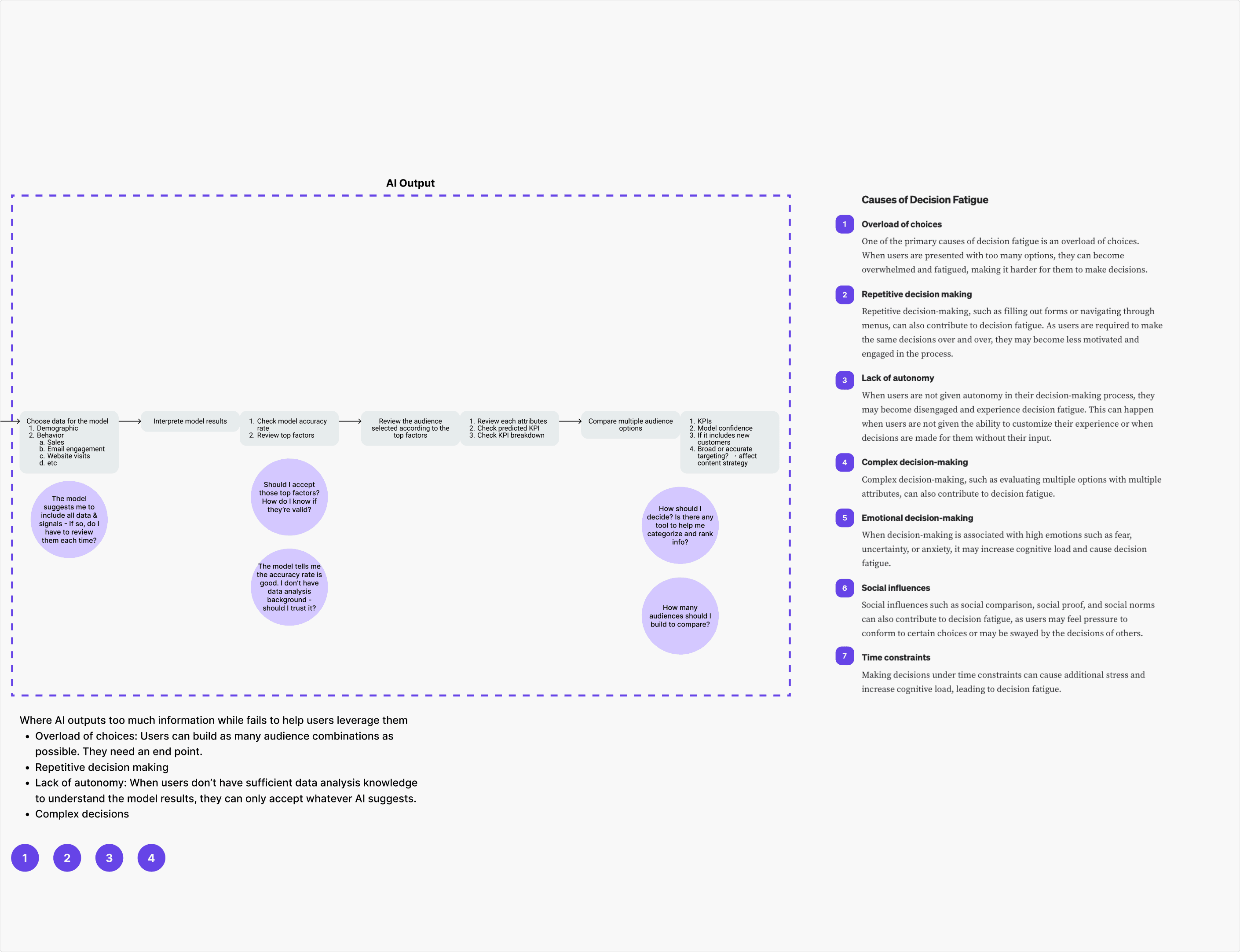

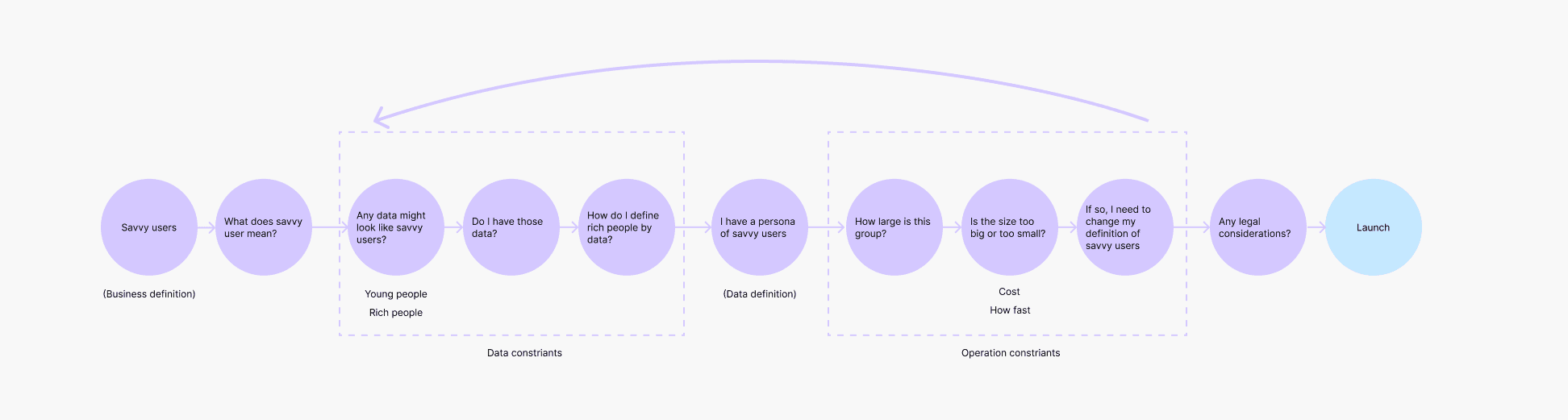

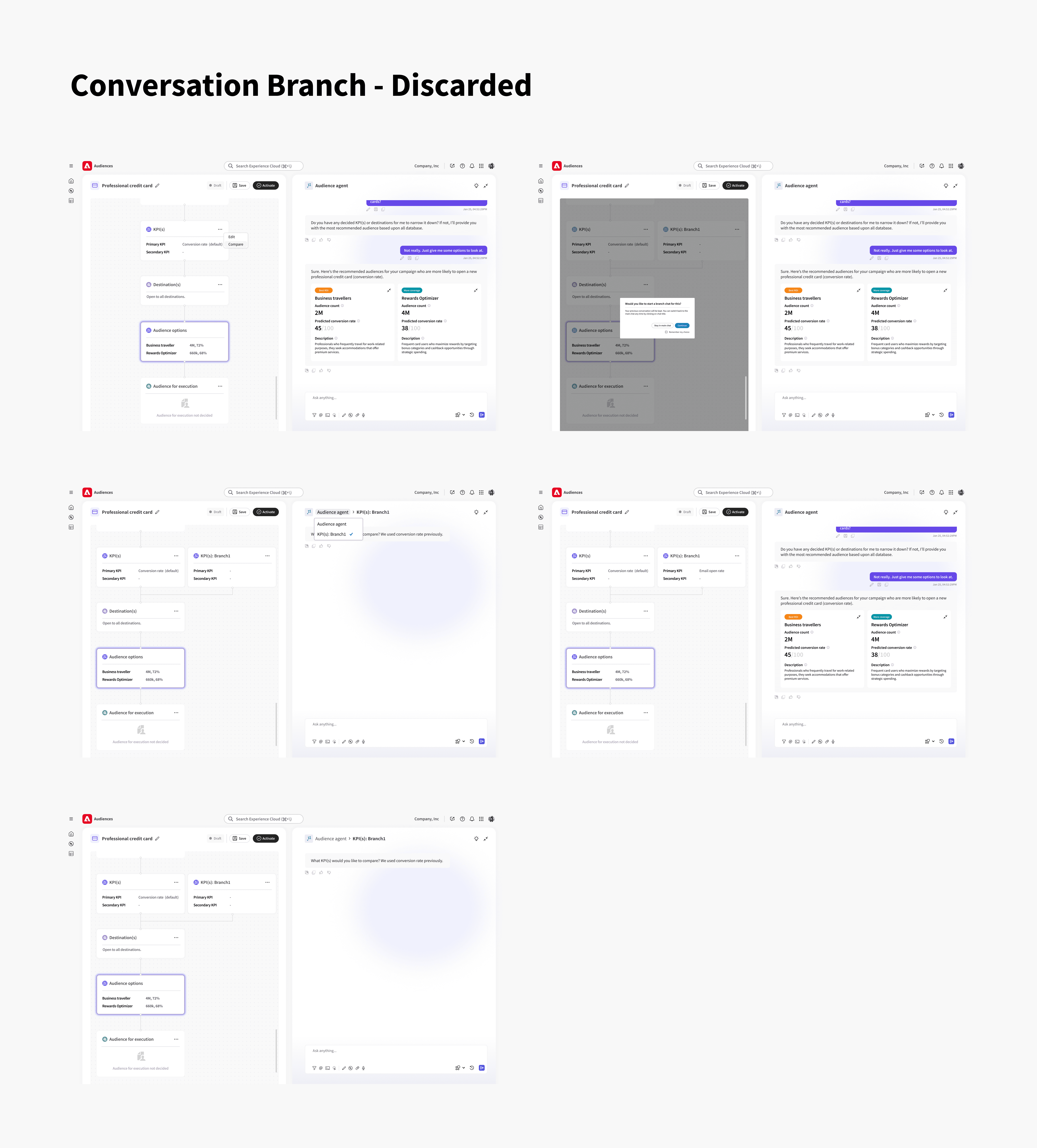

Although the AI agents actively offer suggestions and recommendations, users complained that the human-AI interaction in Audience Builder felt like a "rabbit hole." Traditional interfaces are linear and predictable, but the AI chat flow feels endless and leaves users feeling they have lost control.

Insights from user research

Endless conversation:

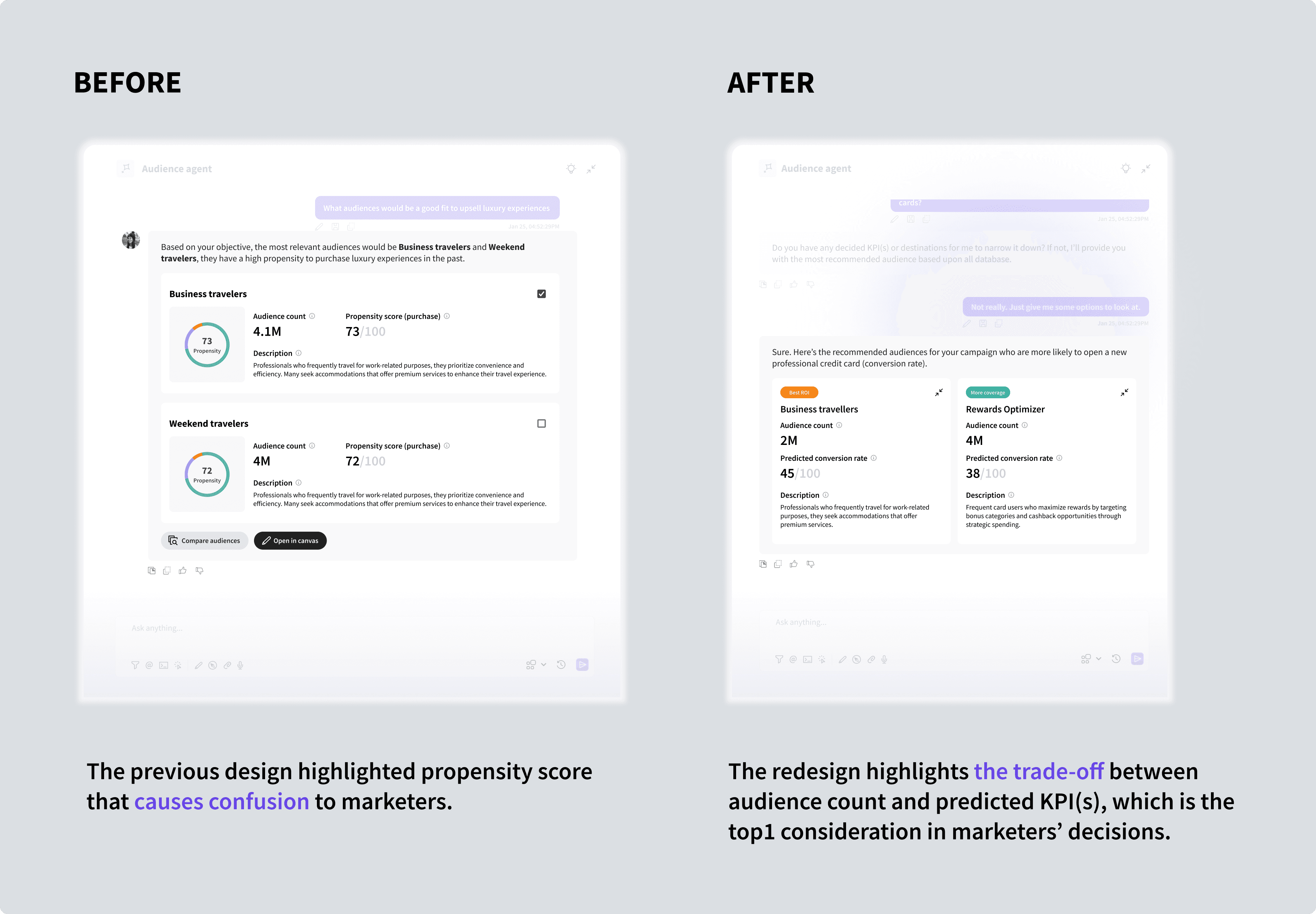

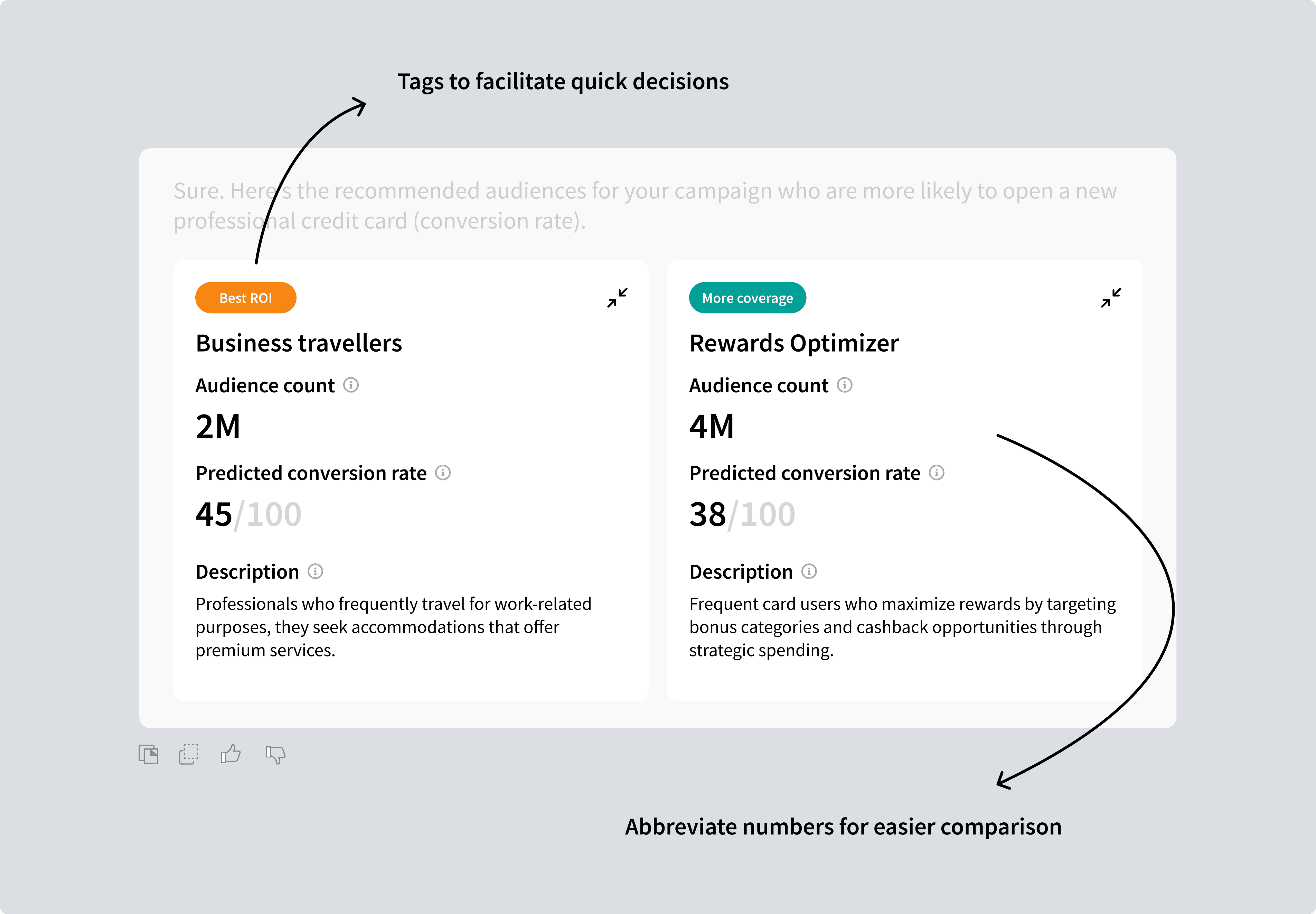

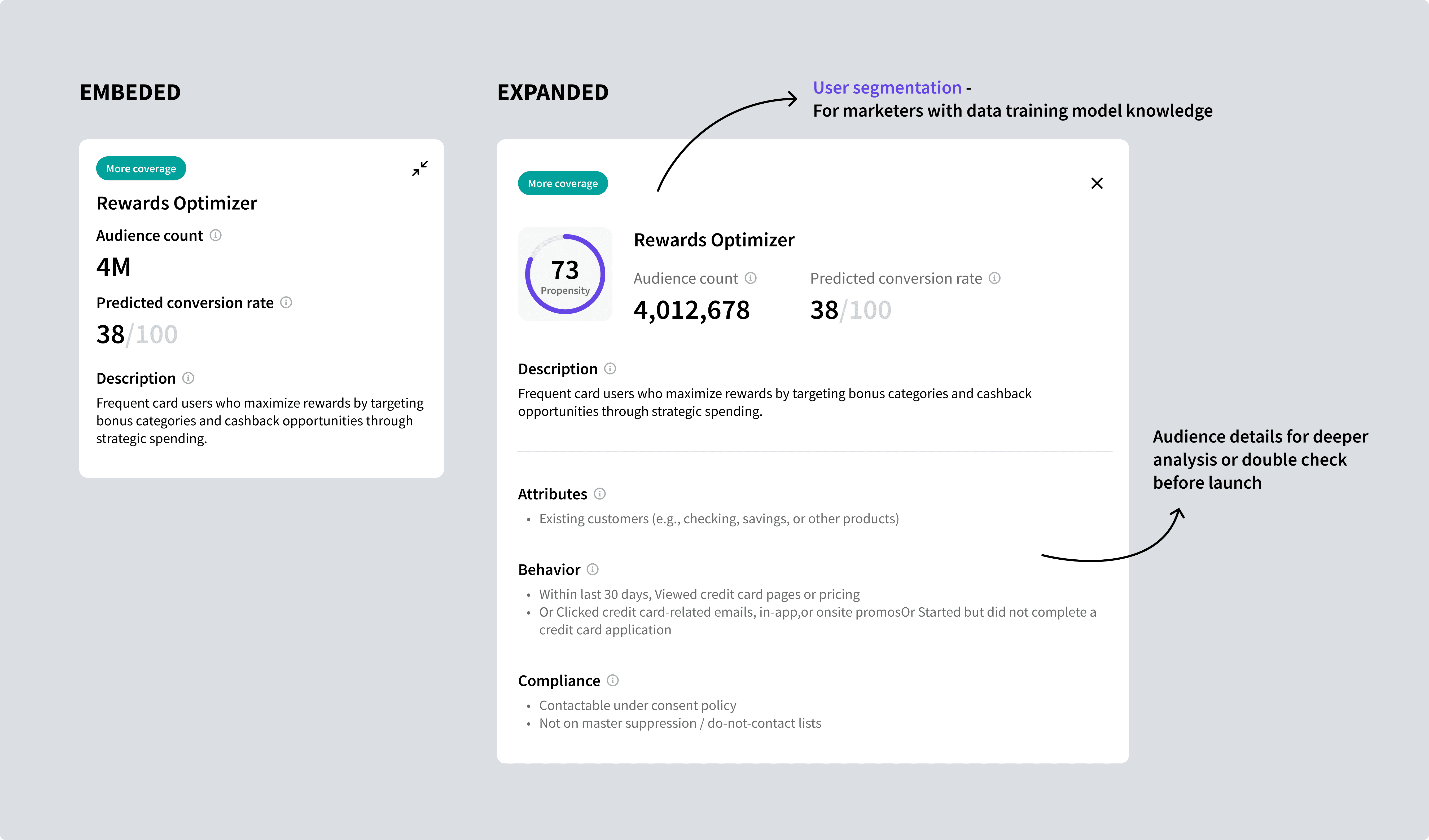

Users cannot anticipate how long a conversation will last before they reach a solid answer.

Terminology-heavy conversation:

The AI agents' answers include many terms related to the propensity model and its training data. These terms were meant to provide more transparency, but many marketers do not have a data background.